I have a natural urge to secure stuff to the point some might call paranoid. Maybe that’s why I ended up as a security engineer to make a living. But the harsh reality is that in a professional environment, one doesn’t always get the chance to fulfill these dreams of perfectly hardened and all-best-practices-applied tech.

Short-handed teams, business requirements, creeping technical debt from the early 2000s and other monsters under the bed sometimes get in the way, and we gotta make do with what we’ve got, trying to make the most of it.

This is where the glorious homelab comes in. At home, I am the C{E,IS,T,F,P}O, NOC, SOC, DevOps, SecOps, whichever hat I want to wear. I get to make decisions and have the opportunity to implement things as they should be. Perfectly. From day one. As part of my quest for the ultimate hardened homelab, I like to imagine possible attack paths whenever I make a change to the infrastructure (or to an application, whenever I finally get to the pet projects patiently waiting in the backlog).

The thinking goes like this: let’s suppose I’m a threat actor and somehow I notice the commit message of the change about to be made. How can I exploit this information? What attacks should I focus my efforts on to achieve maximal lateral movement? How can I turn my insider knowledge into full network compromise?

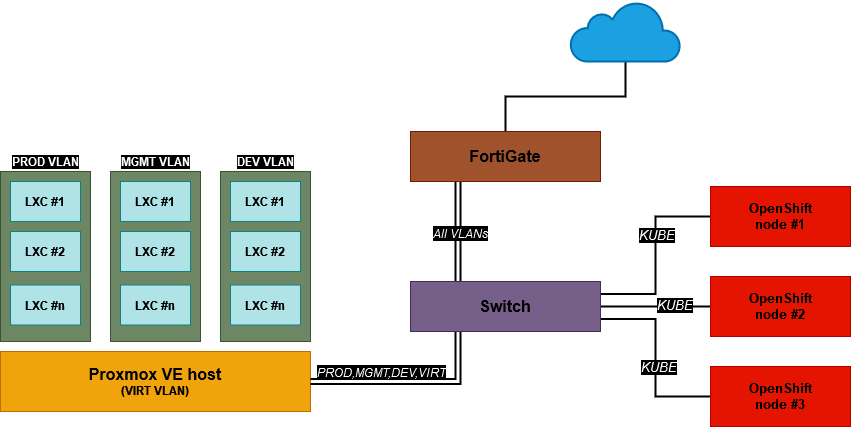

Let’s take an example: the other day I wanted to play around with OpenShift clusters. This required setting up a DNS record like *.apps.lab.example.com, pointing to the ingress IP. Sadly, the FortiGate device I use as my DNS server doesn’t support wildcards. A public DNS provider was out of the question, as I wanted my cluster to function even during Internet outages (which happen quite often, thanks to Europe’s largest telco, which starts with a T and ends with “elekom”). The obvious solution is to deploy a new DNS server with something more flexible, like bind9 on Linux. But where to deploy it? Let’s take a look at the current (simplified) network topology:

Now we have quite a few options here (from recklessly insecure to tinfoil hat paranoid):

- on the Proxmox VE host

- a new LXC in MGMT VLAN

- a new VM in MGMT VLAN

- a new VM in KUBE VLAN

- a new dedicated server plugged in KUBE VLAN

The keyword here is isolation. We want to make lateral movement as hard as possible by isolating services from each other. But first, we gotta find out what to isolate this new service from. This is where the Crown Jewel Analysis comes in, which is the process of defining the organization’s most valuable assets and focusing defense efforts around protecting them.

For our home, these jewels happen to be located in the PROD VLAN, where the services handle family photos, financial documents, health data and other stuff that we really don’t want to share with anyone. The DEV VLAN basically consists of on-demand, short-lived containers and VMs that I use for experimentation and that don’t hold any sensitive data or secrets. The MGMT VLAN, however, hosts our SaltStack master, which can execute commands on all agents, thus functioning as a backdoor to our whole network. The admin interfaces of the FortiGate and the switch are also reachable from this VLAN. So now we know that we must prevent an attacker from getting onto the MGMT and PROD VLANs at all costs, and above all from reaching the SaltStack master.
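To make the outcome of the Crown Jewel Analysis explicit, here is a minimal Python sketch of the inventory described above. The field names (`sensitive_data`, `controls_other_hosts`) and the classification of the KUBE VLAN are my own assumptions for illustration, not the output of any real tool.

```python
# Hypothetical inventory distilled from the paragraph above. The field names
# and the KUBE entry are illustrative assumptions, not real tooling output.
VLANS = {
    "PROD": {
        "assets": ["family photos", "financial documents", "health data"],
        "sensitive_data": True,
        "controls_other_hosts": False,
    },
    "DEV": {
        "assets": ["short-lived containers and VMs"],
        "sensitive_data": False,
        "controls_other_hosts": False,
    },
    "MGMT": {
        "assets": ["SaltStack master", "FortiGate admin", "switch admin"],
        "sensitive_data": False,
        "controls_other_hosts": True,
    },
    "KUBE": {
        "assets": ["OpenShift cluster"],
        "sensitive_data": False,
        "controls_other_hosts": False,
    },
}


def crown_jewel_vlans(vlans):
    """Return the VLANs holding sensitive data or control over other hosts."""
    return sorted(
        name
        for name, info in vlans.items()
        if info["sensitive_data"] or info["controls_other_hosts"]
    )


print(crown_jewel_vlans(VLANS))  # ['MGMT', 'PROD']
```

The point of the second flag is that a VLAN can be a crown jewel without storing any precious data itself: MGMT qualifies purely because of what it can control.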

Let’s investigate the shortest path from zero to pwn3d in each deployment scenario. To focus on lateral movement this time, we suppose that the OpenShift cluster is already compromised (securing OpenShift is a whole other topic, which might or might not get its own post in the future).

The only hole in the firewall from KUBE VLAN to any other local network is for the DNS server, so the threat actor would first need a BIND exploit. Such an exploit (hopefully) does not exist at the moment for the most recent version, but it has happened before (CVE-2020-8625), and a new vulnerability might be introduced at any time. But what happens if the DNS server is rooted? This is where the different levels of isolation matter very much.

DNS on PVE

If the DNS server resides on the PVE host, it’s instant game over. Our hypervisor has fallen, and so have the Salt master and all the PROD services with it. For LXC guests, it’s trivial to obtain the filesystem contents. The only thing that could reduce the blast radius in this case is if our services were running in VMs with encrypted virtual disks. But even then, the attacker controls the host and could most likely take a memory dump of the KVM guest and extract the disk encryption keys from memory. Compromising the Salt master would also mean access to any other hosts, including ones that might not be running on PVE at all. Not an ideal position to be in, unless you wanna practice your incident response skills while your whole digital life is fading to nothing.

DNS in LXC, MGMT VLAN

With this option, we have a bit more of a safety net. BIND’s LXC container is compromised, but the attacker can’t do anything meaningful yet. They would need to pivot to other hosts in MGMT or escape the container. I’m using unprivileged containers with AppArmor profiles, so escaping would be hard, but not impossible. All it takes is a kernel exploit or an AppArmor misconfiguration and bam! Hypervisor pwned, network pwned.

There’s also another possibility for lateral movement: all the management services listening on the network, like a TP-Link web interface, FortiGate admin access, SSH servers and whatnot. Most of them are protected by host firewalls to prevent access, even from the same layer 2 domain, but some devices don’t allow such granular control. For example, if our adversary manages to exploit the switch’s web UI, they might grant themselves access to other VLANs. They might also try layer 2 attacks like MAC flooding, but most of the traffic is TLS encrypted, so no straightforward exploit here.

Bottom line: lateral movement would require significant effort, but it’s not impossible. It would stop a script kiddie, but an APT would most likely find a way to compromise the network and put our ~~nudes~~ bird photos up for sale on the dark web.

DNS in VM, MGMT VLAN

Here we ditched containers for something with a bit more isolation: full-blown virtual machines. The main difference is that now we’re running a separate kernel in the VM, whereas with LXC the container shares its kernel with the host. Yes, we added extra protection, but also added overhead. Was it worth it? Doubt.

What we did is reduce the attack surface by not exposing the host kernel to our supposedly-compromised DNS server. But a KVM breakout is still not impossible, as seen in CVE-2021-29657. And we’re still in one of our most important VLANs, where part of our jewels are stored. Not the best tradeoff, I’m afraid.

DNS in VM, KUBE VLAN

So why not put this untrusted DNS server together with its infected OpenShift siblings? We can deploy a host firewall which blocks connections from cluster members to any port other than 53, so we won’t increase the attack surface by putting them in the same L2 domain. And now our master hacker can’t reach any production hosts from the DNS server they rooted (of course, we already have a deny-all-by-default firewall between VLANs). The damage is localized, unless there’s a KVM breakout exploit.
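The two layers of filtering in this scenario can be sketched as a small decision function. This is a simplified Python model under my own assumptions, not the actual FortiGate or host firewall configuration: the `Packet` fields, the `dns` hostname and the choice to leave other same-VLAN traffic unfiltered are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_vlan: str
    dst_vlan: str
    dst_host: str
    protocol: str  # "udp" or "tcp"
    dst_port: int


DNS_HOST = "dns"  # hypothetical hostname of the new DNS VM in KUBE VLAN


def crosses_vlan_boundary(pkt):
    """True if the packet would have to pass the inter-VLAN firewall."""
    return pkt.src_vlan != pkt.dst_vlan


def dns_host_firewall_allows(pkt):
    """Host firewall on the DNS VM: only port 53 over UDP or TCP gets in."""
    return pkt.protocol in ("udp", "tcp") and pkt.dst_port == 53


def is_allowed(pkt):
    # Layer 1: deny all by default between VLANs (no holes in this scenario).
    if crosses_vlan_boundary(pkt):
        return False
    # Layer 2: the DNS VM only accepts DNS traffic from its L2 neighbours.
    if pkt.dst_host == DNS_HOST:
        return dns_host_firewall_allows(pkt)
    # Other same-VLAN traffic is not modelled in this sketch.
    return True


print(is_allowed(Packet("KUBE", "KUBE", "dns", "udp", 53)))     # True
print(is_allowed(Packet("KUBE", "KUBE", "dns", "tcp", 22)))     # False
print(is_allowed(Packet("KUBE", "PROD", "photos", "tcp", 443))) # False
```

With the DNS server inside KUBE VLAN, the inter-VLAN rule set needs no exception at all, which is exactly what keeps a rooted DNS server away from production hosts.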

DNS on dedicated server

To eliminate the KVM escape nightmare scenario, we could deploy a separate, physical server for DNS. For DNS only. This machine, even if it’s something really small like a Raspberry Pi, would spend most of its life idle. Meanwhile, turtles, seals and polar bears would all cry in pain because of the energy wasted.

Risk management

Decision time! The security engineer (me) and the network architect (me) briefed management (also me) about the options and the risk vs. reward factors associated with them. They came to the conclusion that the possibility of a VM breakout is an accepted risk, because while the consequences would be devastating, the chance of this happening is quite slim.

Looking at the big picture, this attack path would require compromising an Internet-exposed service on OpenShift, installing tools to discover the network and send crafted payloads, using a zero-day against the DNS server (old exploits won’t work because security patches are installed automatically) and then doing the breakout magic. As an additional line of defense, DNS logs will also be sent to a SIEM, which would alert on any funny business going on.
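To compare this chain with the other deployment options, the five scenarios can be written down as tiny attack graphs. The Python sketch below is a rough model under my own assumptions: the node names and exploit labels are illustrative, the starting point is the already-compromised OpenShift cluster, and rooting BIND on the PVE host is treated as immediate network compromise.

```python
# Each edge is (source, target, exploit needed). Node and exploit names are
# illustrative labels for the scenarios in this post, not real inventory.
SCENARIOS = {
    "DNS on PVE": [
        ("openshift", "network_compromise", "BIND exploit"),
    ],
    "DNS in LXC, MGMT VLAN": [
        ("openshift", "dns_lxc", "BIND exploit"),
        ("dns_lxc", "network_compromise", "container escape"),
        ("dns_lxc", "network_compromise", "management service exploit"),
    ],
    "DNS in VM, MGMT VLAN": [
        ("openshift", "dns_vm", "BIND exploit"),
        ("dns_vm", "network_compromise", "KVM breakout"),
        ("dns_vm", "network_compromise", "management service exploit"),
    ],
    "DNS in VM, KUBE VLAN": [
        ("openshift", "dns_vm", "BIND exploit"),
        ("dns_vm", "network_compromise", "KVM breakout"),
    ],
    "DNS on dedicated server": [
        ("openshift", "dns_server", "BIND exploit"),
    ],
}


def attack_paths(edges, start="openshift", goal="network_compromise"):
    """Enumerate every exploit chain from start to goal (depth-first)."""
    graph = {}
    for src, dst, exploit in edges:
        graph.setdefault(src, []).append((dst, exploit))

    paths = []

    def walk(node, chain, visited):
        if node == goal:
            paths.append(chain)
            return
        for nxt, exploit in graph.get(node, []):
            if nxt not in visited:
                walk(nxt, chain + [exploit], visited | {nxt})

    walk(start, [], {start})
    return paths


for name, edges in SCENARIOS.items():
    paths = attack_paths(edges)
    shortest = min((len(p) for p in paths), default=None)
    print(f"{name}: {len(paths)} path(s), shortest chain = {shortest}")
```

Counting exploits is a crude measure, since a KVM breakout and a switch web UI bug are hardly equally difficult, but it does show how the accepted option leaves a single two-exploit path where the MGMT options leave two.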